| Partition 1 | | | | Partition 2 | | | |
|---|---|---|---|---|---|---|---|
| City | Date | Low | High | City | Date | Low | High |
| Austin | 2012-07-01 | 73 | 89 | Austin | 2012-07-02 | 76 | 96 |
| Boston | 2012-07-01 | 71 | 91 | Boston | 2012-07-02 | 70 | 82 |
| San Francisco | 2012-07-01 | 58 | 67 | San Francisco | 2012-07-02 | 57 | 66 |
| Seattle | 2012-07-01 | 56 | 67 | Seattle | 2012-07-02 | 55 | 67 |
| Austin | 2012-07-03 | 77 | 99 | Austin | 2012-07-04 | 75 | 99 |
| Boston | 2012-07-03 | 67 | 85 | Boston | 2012-07-04 | 67 | 84 |
| San Francisco | 2012-07-03 | 55 | 68 | San Francisco | 2012-07-04 | 54 | 72 |
| Seattle | 2012-07-03 | 53 | 65 | Seattle | 2012-07-04 | 53 | 68 |
| Austin | 2012-07-05 | 75 | 94 | Austin | 2012-07-06 | 73 | 96 |
| Boston | 2012-07-05 | 68 | 78 | Boston | 2012-07-06 | 69 | 84 |
| San Francisco | 2012-07-05 | 55 | 64 | San Francisco | 2012-07-06 | 55 | 67 |
| Seattle | 2012-07-05 | 52 | 74 | Seattle | 2012-07-06 | 55 | 77 |

| Partition 1 | | | | | Partition 2 | | | | |
|---|---|---|---|---|---|---|---|---|---|
| City | Date | Low | High | Difference | City | Date | Low | High | Difference |
| Austin | 2012-07-01 | 73 | 89 | 16 | Austin | 2012-07-02 | 76 | 96 | 20 |
| Boston | 2012-07-01 | 71 | 91 | 20 | Boston | 2012-07-02 | 70 | 82 | 12 |
| San Francisco | 2012-07-01 | 58 | 67 | 9 | San Francisco | 2012-07-02 | 57 | 66 | 9 |
| Seattle | 2012-07-01 | 56 | 67 | 11 | Seattle | 2012-07-02 | 55 | 67 | 12 |
| Austin | 2012-07-03 | 77 | 99 | 22 | Austin | 2012-07-04 | 75 | 99 | 24 |
| Boston | 2012-07-03 | 67 | 85 | 18 | Boston | 2012-07-04 | 67 | 84 | 17 |
| San Francisco | 2012-07-03 | 55 | 68 | 13 | San Francisco | 2012-07-04 | 54 | 72 | 18 |
| Seattle | 2012-07-03 | 53 | 65 | 12 | Seattle | 2012-07-04 | 53 | 68 | 15 |
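Going from the raw partitions to this table drops the 2012-07-05 and 2012-07-06 rows and adds a Difference column equal to High minus Low (for Austin on 2012-07-01, 89 - 73 = 16). The tables do not state the filter predicate, so the cutoff below (keep rows dated on or before 2012-07-04) is an assumption inferred from which rows survive, and `filter_and_derive` is a hypothetical name. A minimal Python sketch applied to partition 1:

```python
from datetime import date

# Assumed cutoff, inferred from the tables: rows dated after 2012-07-04 are dropped.
CUTOFF = date(2012, 7, 4)

# Partition 1 from the first table: (city, date, low, high).
partition_1 = [
    ("Austin", date(2012, 7, 1), 73, 89),
    ("Boston", date(2012, 7, 1), 71, 91),
    ("San Francisco", date(2012, 7, 1), 58, 67),
    ("Seattle", date(2012, 7, 1), 56, 67),
    ("Austin", date(2012, 7, 3), 77, 99),
    ("Boston", date(2012, 7, 3), 67, 85),
    ("San Francisco", date(2012, 7, 3), 55, 68),
    ("Seattle", date(2012, 7, 3), 53, 65),
    ("Austin", date(2012, 7, 5), 75, 94),
    ("Boston", date(2012, 7, 5), 68, 78),
    ("San Francisco", date(2012, 7, 5), 55, 64),
    ("Seattle", date(2012, 7, 5), 52, 74),
]


def filter_and_derive(rows):
    """Drop rows dated after CUTOFF, then append Difference = High - Low."""
    return [
        (city, day, low, high, high - low)
        for city, day, low, high in rows
        if day <= CUTOFF
    ]


for city, day, low, high, diff in filter_and_derive(partition_1):
    print(city, day.isoformat(), low, high, diff)
```

Filtering and deriving touch one row at a time, so each partition can run this step independently with no data exchanged between partitions.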

| Partition 1 | | Partition 2 | |
|---|---|---|---|
| City | Max Difference | City | Max Difference |
| Austin | 22 | Austin | 24 |
| Boston | 20 | Boston | 17 |
| San Francisco | 13 | San Francisco | 18 |
| Seattle | 12 | Seattle | 15 |
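Each partition computes this partial result on its own: for every city it keeps only the largest Difference it has seen, so Austin's 16 and 22 in partition 1 collapse to 22. A minimal Python sketch, assuming each partition is held in memory as a list of (city, difference) pairs; `partial_max` is a hypothetical name:

```python
# (city, difference) pairs for each partition after filtering and deriving.
partitions = {
    1: [("Austin", 16), ("Boston", 20), ("San Francisco", 9), ("Seattle", 11),
        ("Austin", 22), ("Boston", 18), ("San Francisco", 13), ("Seattle", 12)],
    2: [("Austin", 20), ("Boston", 12), ("San Francisco", 9), ("Seattle", 12),
        ("Austin", 24), ("Boston", 17), ("San Francisco", 18), ("Seattle", 15)],
}


def partial_max(pairs):
    """Partial aggregation within one partition: max difference per city."""
    result = {}
    for city, diff in pairs:
        # Keep the larger of the value seen so far and the new one.
        result[city] = max(result.get(city, diff), diff)
    return result


partials = {pid: partial_max(pairs) for pid, pairs in partitions.items()}
print(partials)
```

The partial results are small (one value per city per partition), which keeps the data that has to cross partition boundaries in the next step small as well.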

| Partition 1 | | Partition 2 | |
|---|---|---|---|
| City | Max Difference | City | Max Difference |
| Austin | 22 | San Francisco | 13 |
| Austin | 24 | San Francisco | 18 |
| Boston | 20 | Seattle | 12 |
| Boston | 17 | Seattle | 15 |
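This redistribution routes every partial result for a given city to the same partition, so that partition alone can produce the final value for that city. The tables do not say how the target partition is chosen; the sketch below uses a hypothetical range split on the city name (names before "M" go to partition 1) only because it reproduces the assignment shown. Hashing the key is another common choice. `target_partition` and `shuffle` are hypothetical names:

```python
# Partial results from each partition: city -> max difference.
partials = {
    1: {"Austin": 22, "Boston": 20, "San Francisco": 13, "Seattle": 12},
    2: {"Austin": 24, "Boston": 17, "San Francisco": 18, "Seattle": 15},
}


def target_partition(city):
    """Hypothetical range partitioner: city names before 'M' go to partition 1."""
    return 1 if city < "M" else 2


def shuffle(partials):
    """Send every (city, partial max) pair to the partition that owns its city."""
    out = {1: [], 2: []}
    for pid in sorted(partials):
        for city, value in partials[pid].items():
            out[target_partition(city)].append((city, value))
    return out


shuffled = shuffle(partials)
print(sorted(shuffled[1]))
print(sorted(shuffled[2]))
```

Whatever routing function is used, the only requirement is that it is deterministic in the city, so both partials for Austin end up side by side.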

| Partition 1 | | Partition 2 | |
|---|---|---|---|
| City | Max Difference | City | Max Difference |
| Austin | 24 | San Francisco | 18 |
| Boston | 20 | Seattle | 15 |
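Because max is associative and commutative, the maximum of the partial maxima equals the maximum over all of a city's rows: Austin's partials 22 and 24 combine to 24. A minimal sketch of this final step, with `final_max` as a hypothetical name and the shuffled partials written out as literals:

```python
# Shuffled partials: each partition holds every partial for the cities it owns.
shuffled = {
    1: [("Austin", 22), ("Austin", 24), ("Boston", 20), ("Boston", 17)],
    2: [("San Francisco", 13), ("San Francisco", 18), ("Seattle", 12), ("Seattle", 15)],
}


def final_max(pairs):
    """Final aggregation: combine partial maxima into one maximum per city."""
    result = {}
    for city, partial in pairs:
        result[city] = max(result.get(city, partial), partial)
    return result


final = {pid: final_max(pairs) for pid, pairs in shuffled.items()}
print(final)
```

The same combine function serves both the partial and the final phase here; that reuse works for max, but an aggregate such as an average would need a different partial state (for example, a sum and a count).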

| Row | City | Date | Low | High |
|---|---|---|---|---|
| 1 | Austin | 2012-07-01 | 73 | 89 |
| 2 | Austin | 2012-07-03 | 77 | 99 |
| 3 | Austin | 2012-07-05 | 75 | 94 |
| 4 | Boston | 2012-07-01 | 71 | 91 |
| 5 | Boston | 2012-07-03 | 67 | 85 |
| 6 | Boston | 2012-07-05 | 68 | 78 |

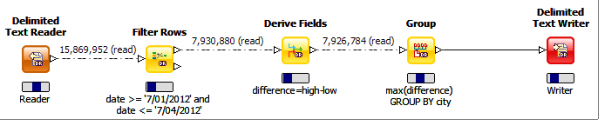

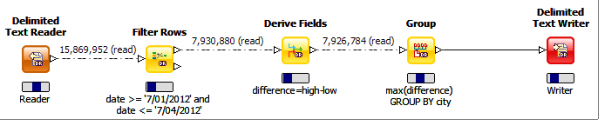

| Time | Current output of Reader | Current output of FilterRows | Current output of DeriveFields | Running Max, tracked by Group (partials) | Current output of Group (partials) |
|---|---|---|---|---|---|
| 1 | Row: 1, City: Austin, Date: 2012-07-01, Low: 73, High: 89 | | | | |
| 2 | Row: 2, City: Austin, Date: 2012-07-03, Low: 77, High: 99 | Row: 1, City: Austin, Low: 73, High: 89 | | | |
| 3 | Row: 3, City: Austin, Date: 2012-07-05, Low: 75, High: 94 | Row: 2, City: Austin, Low: 77, High: 99 | Row: 1, City: Austin, Difference: 16 | | |
| 4 | Row: 4, City: Boston, Date: 2012-07-01, Low: 71, High: 91 | | Row: 2, City: Austin, Difference: 22 | City: Austin, Max Difference: 16 | |
| 5 | Row: 5, City: Boston, Date: 2012-07-03, Low: 67, High: 85 | Row: 4, City: Boston, Low: 71, High: 91 | | City: Austin, Max Difference: 22 | |
| 6 | Row: 6, City: Boston, Date: 2012-07-05, Low: 68, High: 78 | Row: 5, City: Boston, Low: 67, High: 85 | Row: 4, City: Boston, Difference: 20 | City: Austin, Max Difference: 22 | |
| 7 | | | Row: 5, City: Boston, Difference: 18 | City: Boston, Max Difference: 20 | City: Austin, Max Difference: 22 |
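The timeline can be reproduced with a small tick-based simulation. It assumes that each operator forwards at most one row per time step, that FilterRows drops rows dated after 2012-07-04 and removes the Date column (inferred from the table: rows 3 and 6 never reach DeriveFields), and that the input arrives sorted by city, which lets Group (partials) emit Austin's result as soon as the first Boston row reaches it. The operator names mirror the table headers; the Python functions are hypothetical:

```python
from datetime import date

CUTOFF = date(2012, 7, 4)  # assumed filter predicate, inferred from the table

# Input rows sorted by city: (row, city, date, low, high).
ROWS = [
    (1, "Austin", date(2012, 7, 1), 73, 89),
    (2, "Austin", date(2012, 7, 3), 77, 99),
    (3, "Austin", date(2012, 7, 5), 75, 94),
    (4, "Boston", date(2012, 7, 1), 71, 91),
    (5, "Boston", date(2012, 7, 3), 67, 85),
    (6, "Boston", date(2012, 7, 5), 68, 78),
]


def filter_rows(row):
    """FilterRows: drop rows after CUTOFF and project away Date (None = no output)."""
    if row is None or row[2] > CUTOFF:
        return None
    rid, city, _day, low, high = row
    return (rid, city, low, high)


def derive_fields(row):
    """DeriveFields: replace Low and High with Difference = High - Low."""
    if row is None:
        return None
    rid, city, low, high = row
    return (rid, city, high - low)


def simulate(rows, ticks):
    """Advance every stage once per tick; each stage consumes what its upstream produced on the previous tick."""
    reader = filtered = derived = None
    running = None  # (city, max difference) for the group that is still open
    trace = []
    for t in range(1, ticks + 1):
        emitted = None
        # Group (partials): input is sorted by city, so a new city closes the open group.
        if derived is not None:
            _rid, city, diff = derived
            if running is None or running[0] != city:
                emitted = running
                running = (city, diff)
            else:
                running = (city, max(running[1], diff))
        # Shift the pipeline, downstream first, so each stage reads last tick's upstream output.
        derived = derive_fields(filtered)
        filtered = filter_rows(reader)
        reader = rows[t - 1] if t <= len(rows) else None
        trace.append((t, reader, filtered, derived, running, emitted))
    return trace


for t, *cells in simulate(ROWS, 7):
    print(t, cells)
```

Running it for seven ticks reproduces the rows of the table. At tick 7 the Boston group is still open, so a complete operator would also have to flush its running maximum (20) once the input is exhausted.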