Managing DataFlow Cluster Manager

This section describes:

• Starting and Stopping DataFlow Cluster Manager

• Configuring DataFlow Settings within Cluster Manager

Starting the DataFlow Cluster Manager

You can start the DataFlow Cluster Manager from the command line using the clustermgr script found in the bin directory of the DataFlow installation.

The following example provides the command to start Cluster Manager when integrating with YARN:

To start the Cluster Manager on Linux

$DR_HOME/bin/clustermgr start-history-server --cluster.port=47000 --cluster.admin.port=47100 --module=datarush-hadoop-apache3


To start the Cluster Manager on Windows

%DR_HOME%/bin/clustermgr start-history-server --cluster.port=47000 --cluster.admin.port=47100 --module=datarush-hadoop-apache3

The following provides details of the command and options to start Cluster Manager when integrating with YARN:

• Command:

Use the start-history-server command. This command indicates to the Cluster Manager that it is starting in YARN mode and will provide reporting services for YARN-executed jobs.

• Options:

--cluster.port

Sets the port number to use for communications to Cluster Manager by other services.

--cluster.admin.port

Sets the port used by the web application within Cluster Manager. Use this port within a browser to open the Cluster Manager application.

--module

Sets the particular instance of Hadoop that is installed. For a list of supported Hadoop modules, see Hadoop Module Configurations.

During initialization, the clustermgr command checks whether a DataFlow library archive already exists in HDFS. If it does not, clustermgr invokes the publishlibs script, found in the bin directory of the DataFlow installation, to create an archive of the DataFlow lib directory and push the archive file to the /apps/actian/dataflow/archive directory in HDFS.
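
To check by hand whether the archive directory is already present, you can probe the HDFS path named above. The following is a minimal sketch, assuming the standard Hadoop `hdfs` command is on the PATH. The function name `dataflow_archive_exists` is hypothetical and is not part of DataFlow.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of DataFlow): reports whether the
# DataFlow archive directory is present in HDFS.
ARCHIVE_DIR="/apps/actian/dataflow/archive"   # path taken from the text above

dataflow_archive_exists() {
    # 'hdfs dfs -test -d <path>' exits with 0 when <path> is an existing directory.
    hdfs dfs -test -d "${1:-$ARCHIVE_DIR}"
}

# Example call (commented out because it needs a configured Hadoop client):
# if dataflow_archive_exists; then echo "archive directory present"; fi
```

A nonzero exit status means only that the directory was not found or could not be checked. It does not show whether clustermgr has already run publishlibs.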

After Cluster Manager is started, you can manage it using a web browser.

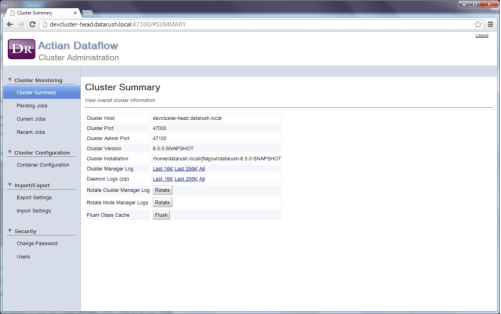

To connect to Cluster Manager, enter the URL: http:<server_name>:<admin-port>. Use the admin port specified in the --cluster.admin.port option, which is used to start Cluster Manager. The Cluster Manager home page looks like this:
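
As an illustration of how the URL is assembled, the sketch below builds it from a host name and an admin port. The helper name `cluster_admin_url` is hypothetical, and the default of 47100 is only the value used in the start example, not a documented product default.

```shell
#!/usr/bin/env bash
# Hypothetical helper: builds the Cluster Manager URL from a host name
# and the admin port passed to --cluster.admin.port at startup.
cluster_admin_url() {
    local host="$1"
    local admin_port="${2:-47100}"   # 47100 matches the start example above
    printf 'http://%s:%s\n' "$host" "$admin_port"
}

# Optional reachability probe (commented out because it needs a running
# Cluster Manager); it prints the HTTP status code returned by the server:
# curl --silent --output /dev/null --write-out '%{http_code}\n' \
#     "$(cluster_admin_url devcluster-head)"
```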

Stopping the DataFlow Cluster Manager

To stop Cluster Manager

$ /opt/datarush/bin/clustermgr stop-history-server --cluster.port=47000 --cluster.host=devcluster-head


The following provides details of the command and options to stop Cluster Manager when integrating with YARN:

• Command:

Use the stop-history-server command. This will exit Cluster Manager.

• Options:

--cluster.port

Specifies the port number to use for communications to the Cluster Manager. Enter the same port number used when the Cluster Manager was started.

--cluster.host

Specifies the name of the host where the Cluster Manager is running. This option is not required when executed on the machine running the Cluster Manager.
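
The optional nature of --cluster.host can be sketched as a small wrapper that adds the option only when a host name is supplied. The function `build_stop_command` is hypothetical. It prints the command rather than running it, and it falls back to /opt/datarush (the path in the example above) when DR_HOME is unset.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints the stop command instead of executing it.
#   $1 = port given to --cluster.port when Cluster Manager was started
#   $2 = optional host name; omit when running on the Cluster Manager machine
build_stop_command() {
    local port="$1"
    local host="${2:-}"
    local cmd="${DR_HOME:-/opt/datarush}/bin/clustermgr stop-history-server --cluster.port=${port}"
    # Add --cluster.host only when stopping a remote Cluster Manager.
    if [ -n "$host" ]; then
        cmd="${cmd} --cluster.host=${host}"
    fi
    printf '%s\n' "$cmd"
}

build_stop_command 47000                   # local stop
build_stop_command 47000 devcluster-head   # remote stop
```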

Configuring DataFlow Settings within Cluster Manager

Several settings control the integration of DataFlow with YARN. Cluster Manager maintains these settings, and you can manage them in the Cluster Administration interface, a web page that lets you manage the settings and monitor DataFlow activity on the cluster.

For more information, see Monitoring DataFlow Jobs in Using DataFlow.

Cluster Administration

To open the Cluster Administration page, browse to the admin port you specified when you started clustermgr (47100 in the example above), then log in with the following default credentials:

• User: root

• Password: changeit

We recommend you change this root password immediately after logging in by clicking Security > Change Password.

The first view in Cluster Administration is the Cluster Summary page.

To access the Cluster Summary page

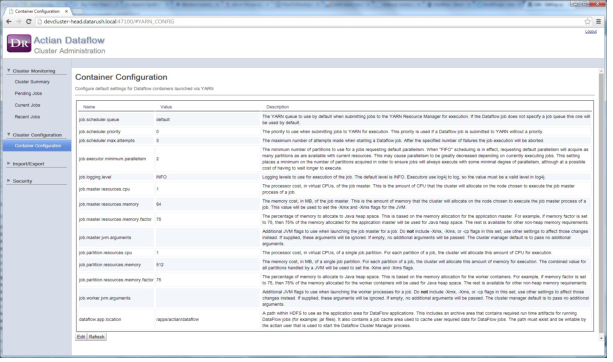

1. Click Cluster Summary on the left, then click Container Configuration.

2. To edit any of the settings, click Edit and then click Save to save any changes.

The following example displays a typical Container Configuration page.

Container Settings Overview

The following table provides the options for Container Configuration settings:

| Option | Description |
|---|---|
| job.scheduler.queue | The default YARN job queue to use for jobs submitted to YARN. Default: "default" |
| job.scheduler.priority | The default job priority. Default: 0 |
| job.scheduler.max.attempts | The maximum number of attempts allowed to start a container. If the maximum number of attempts is exceeded, the job fails. Default: 3 |
| job.executor.minimum.parallelism | The minimum parallelism (number of parallel partitions) with which a job is allowed to run. When a job is first executed, it attempts to allocate a worker container for each requested partition, as defined by the job parallelism setting. The minimum parallelism is the lowest level of parallelism that the job is willing to accept. If the target number of containers cannot be obtained in a timely manner, the job is executed with the containers it has been allocated, provided there are at least the minimum number. If a job requires a specific number of partitions, the parallelism and minimum parallelism values should match. Default: 2 |
| job.logging.level | The logging level used by the application master and the worker containers for a job. Default: "INFO" |
| job.master.resources.cpu | The number of CPU cores to allocate to the application master container for a job. Default: 1. Note: Configuring this setting is mandatory. |
| job.master.resources.memory | The amount of memory (in megabytes) to allocate to the application master container. Note: Configuring this setting is mandatory. |
| job.master.resources.memory.factor | The percentage of the memory allotted to the application master container to allocate as Java heap space. The remaining memory is used as non-heap space. The -Xmx option passed to the JVM is calculated by applying this percentage to the job.master.resources.memory setting. |
| job.master.yarn.ports | The ports that are available to the application master for a new job. If this option is not set or is set to 0, a random port is selected when a new job is launched and is used as the RPC port for the application master. Specify port ranges or a comma-separated list of individual ports. |
| job.master.jvm.arguments | Additional JVM arguments to provide to the JVM running the application master. Do not specify memory settings in this option. |
| job.partition.resources.cpu | The number of CPU cores to allocate to each worker container for a job. Default: 1. Note: Configuring this setting is mandatory. |
| job.partition.resources.memory | The amount of memory (in megabytes) to allocate to each worker container for a job. Note: Configuring this setting is mandatory. |
| job.partition.resources.memory.factor | The percentage of the memory allotted to each worker container to allocate as Java heap space. The remaining memory is used as non-heap space. The -Xmx option passed to the JVM is calculated by applying this percentage to the job.partition.resources.memory setting. |
| job.worker.jvm.arguments | Additional JVM arguments to provide to each JVM running a worker container. Do not specify memory settings in this option. |
| dataflow.app.location | The path to the HDFS-based directory for DataFlow application usage. For more information about the application location, see the installation requirements section above. |
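
The heap-size calculation described for the memory.factor settings can be worked through as simple arithmetic. The following is a minimal sketch, assuming the factor is entered as a whole-number percentage (for example, 75) and that the result is rounded down to whole megabytes. Neither detail is confirmed by the table. The helper name `heap_mb` is hypothetical, and the 4096 MB and 75 values are illustrative, not documented defaults.

```shell
#!/usr/bin/env bash
# Hypothetical helper: estimates the Java heap size in megabytes.
# Assumptions: the factor is a whole-number percentage, and the result is
# rounded down to whole megabytes (the product may round differently).
heap_mb() {
    local memory_mb="$1"
    local factor_percent="$2"
    echo $(( memory_mb * factor_percent / 100 ))
}

# Illustrative values only: a 4096 MB container with a factor of 75.
echo "-Xmx$(heap_mb 4096 75)m"   # prints -Xmx3072m
```

Under these assumptions, the remaining 1024 MB of the container would be left for non-heap use.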

For information about using DataFlow Cluster Manager, see Using DataFlow Cluster Manager in the Using DataFlow Guide.

Last modified date: 01/03/2025