Controlling Executor Settings

Each distributed graph executed on the cluster may have different requirements. For example, one graph might use more memory than another, or you can alter logging levels between different executions of the same graph.

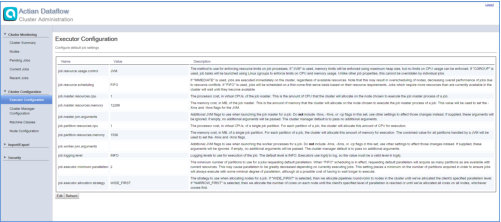

Per-job configuration is managed through the EngineConfig object used when executing the graph. These can be set within a program, overriding the configured defaults. Default values are specified within the Executor Configuration page. These settings are "live" and will take effect when the next job is run.

There are also a few settings that are applied to all executors started on a node. These are values from the node manager and potentially may not have a single valid value across all nodes. Examples are the JVM used to launch the executor or the working directory for the executor process. These settings are also "live" and will take effect when you run the next job.

Scheduling a Job

Typically, DataFlow allows the cluster to schedule the jobs on the basis of the advertised resources on nodes and the declared costs of jobs. Jobs are processed in a first-in, first-out order, waiting until sufficient resources are available in the cluster to execute the job. Jobs that specify the default parallelism will acquire all the available cluster. The number of partitions acquired must meet the minimum parallelism requirement specified when the job is run; job.minimum.parallelism provides a global default for this value. The job will wait until the threshold can be met.

To allow the cluster to schedule new jobs without delay, set the job.resource.scheduling to IMMEDIATE. This configuration is not recommended because the jobs compete for the same resources on nodes, and the performance may degrade. Therefore, this configuration is not generally recommended.

Last modified date: 01/03/2025