Tuning for Concurrency

The number of concurrent connections that a given VectorH instance will accept is governed by the connect_limit parameter, stored in config.dat and managed through the CBF utility. But there are usually more connections than executing queries, so how are resources allocated among concurrent queries?

By default, VectorH tries to balance single-query and multi-query workloads. The key parameters in balancing such workloads are:

• The number of CPU cores in the VectorH cluster

• The number of threads that a query is able to use

• The number of threads that a query is granted by the system

• The number of queries currently executing in the system

The first query to run in an installation is allowed to use as many threads as it is able to, up to the system-wide limit defined by max_parallelism_level, which defaults to all of the cores in the cluster. In this way, an “expensive” query will run on as many threads as can be executed on all of the CPU cores in the cluster, if the system is otherwise idle.

Starting from the second concurrent query, VectorH tries to evenly share the resources in a cluster among new queries, so that, for example, if there are five queries running in a 100-core system, then each query will be allocated up to 20 threads (if it can use them). This CPU allocation strategy continues (for example, the 20th query will be allocated 5 threads) until each new query receives only one thread per node. So for a cluster with N cores, the (N/2) + 1 query will execute on only one thread per node; for example with 100 cores, the 51st concurrent query will execute with only one thread per node.

The query, however, is not physically tied to execution on a specific machine or a number of cores, once the execution plan has been generated. The operating system controls how each query thread is given execution time on the CPUs in the machine, and query affinity is not used.

In fact, by default the number of cores in a system is over-allocated by 25% so the above calculation would actually operate on a maximum of 125 cores, rather than 100.

Although for the first few queries this thread allocation method leads to over-subscription of the number of threads versus available cores, if the query load is fairly constant this method results in a stable situation with properly divided resources as early, resource-heavy queries finish and later queries using fewer threads start. The operating system handles the initial over-subscription, but for concurrency tests the first and last N queries (where N is the level of concurrency) should be discarded from the test results to allow for test startup and shutdown lead and lag time.

If you know from the outset that you want to optimize for concurrent query execution, then you can constrain the early queries to use no more than N threads, even where they would otherwise have used more, in order to more evenly spread the CPU usage out across the queries (since default strategy gives greater weight to the “early” queries within a set). To do this, you can add a clause to the end of the SQL statement like this:

SELECT *

FROM lineitem

WITH MAX_PARALLELISM_LEVEL = 12

Alternatively you can change the installation default setting to affect all users and all queries, by changing the vectorwise.conf file and restarting VectorH.

Finally, you can also change the setting for all queries in a session by executing this command at the start of the session:

CALL VECTORWISE (SETCONF 'engine, max_parallelism_level, ''12''')

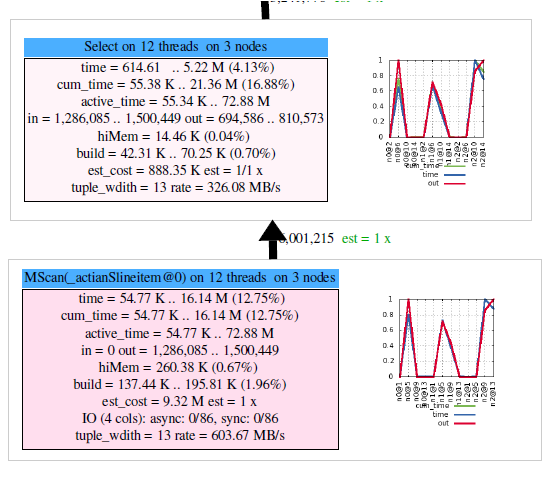

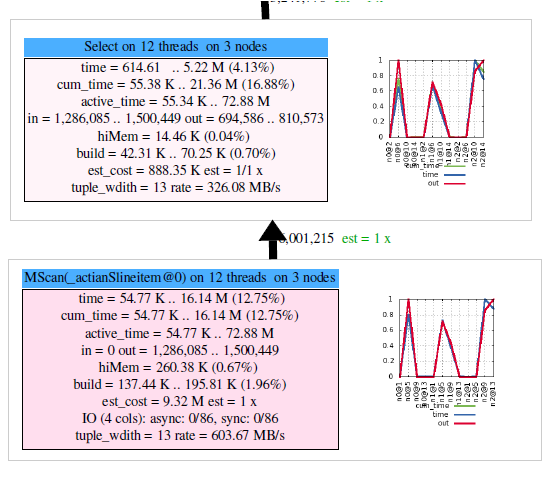

For any given query, you can see how many threads it has been allocated by looking at the query profile, a fragment of which (created by the x100profgraph tool mentioned later) is illustrated here. This query is using twelve threads in total over three nodes:

The number of threads that a query can profitably use is also influenced by the number of partitions in the main tables that the query accesses, since a thread is used to read each partition of a table. So, it is a good idea to have large tables partitioned into a number of chunks that is equal to the number of threads that you would like most queries to use. A sensible default here is 50% of the number of cores in the system.